Book Review: Thinking in Systems

Donella Meadows introduces the concept of systems thinking. It is a useful tool for understanding the world.

Starting with the behavior of the system directs one’s thoughts to dynamic, not static, analysis—not only to “What’s wrong?” but also to “How did we get there?”

Donella Meadows’s Thinking in Systems is a classic in the field of management and strategic thinking. Meadows circulated a draft in 1993 and the book was published posthumously in 2008; it has aged well. Why is it worth understanding systems? So you can design them well. Systems define the world.

Defining Systems

To understand systems thinking, we first need to define what we mean by a system. A system is, basically, anything that “is a set of things… [that are] interconnected in such a way that they produce their own pattern of behavior over time.” You’re not alone if you think this definition is incredibly vague. That vagueness is both an opportunity and a challenge in understanding systems.

It is an art to define the boundaries of a system. You can think of a system as a mental model of the world. You need to make the model as simple as possible, but no simpler.

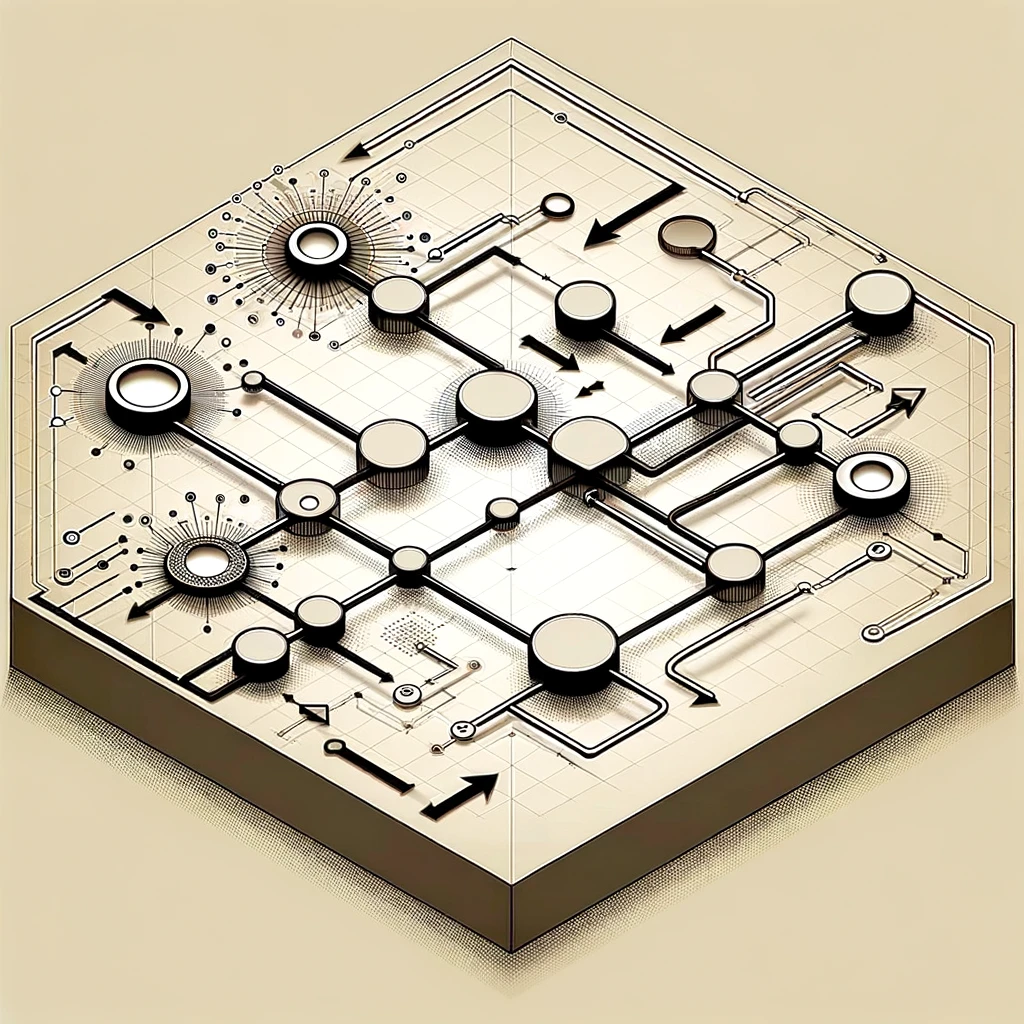

To make this a bit more concrete, let’s start with a very simple example of fish (say North Atlantic Haddock). The basic elements of a system diagram are the stocks and flows. In our example, the stock would correspond to the population of Haddock and the flows would correspond to the inflows (number of new fish spawned each cycle) and outflows (the number of fish that die off or are fished each cycle). A very simple system diagram would look like this:

Note: the inflows here are a function of the existing stock – this is not always true for single-stock systems

In this very simple system diagram, you can start to model how the population of Haddock will evolve depending on a few variables such as the spawning rate and the fishing rate. Variables that affect the spawning rate could include the climate and the availability of nutrients. The amount fished could be influenced by the price of fish and by technology that makes fishermen more efficient. Another outflow, not shown here, would be a disease that kills off a disproportionate share of the fish.

Even in this very simple system, we are starting to see that there is some discretion in what to include. If you’re creating this model to understand the impact of a policy decision (no fishing in January), you could estimate how each of the flows would change and, from that, how the level of the stock would change over time. Many conservation efforts do this. As with all models, you’ll need to be thorough about what to include; otherwise your model may miss something very basic about the system you’re trying to influence. If you need to extend this example, the spawning rate could be its own stock, and changes in that rate could be modeled as flows.
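To make the Haddock model a bit more tangible, here is a minimal sketch of a single-stock simulation in Python. Everything specific in it is an assumption for illustration: the function name `simulate_stock`, the monthly time step, and all of the rates are made up and are not real fishery data. It only shows the mechanics of a stock changing through its inflows and outflows, and how a policy such as "no fishing in January" can be tested by switching one outflow off for selected steps.

```python
def simulate_stock(initial_stock, spawn_rate, death_rate, fishing_rate,
                   steps, closed_steps=frozenset()):
    """Single-stock model: return the stock at the start and after each step.

    All rates are fractions of the current stock per step. They are
    illustrative assumptions, not real haddock data.
    """
    stock = float(initial_stock)
    history = [stock]
    for step in range(steps):
        # Inflow: new fish spawned, here a function of the existing stock.
        inflow = spawn_rate * stock
        # Outflow: natural deaths plus the catch (zero while fishing is closed).
        fished = 0.0 if step in closed_steps else fishing_rate * stock
        outflow = death_rate * stock + fished
        # A stock cannot go below zero.
        stock = max(stock + inflow - outflow, 0.0)
        history.append(stock)
    return history


# Hypothetical monthly rates; the policy closes fishing in the first month of each year.
months = 24
januaries = {m for m in range(months) if m % 12 == 0}
baseline = simulate_stock(1000, 0.10, 0.05, 0.06, months)
with_ban = simulate_stock(1000, 0.10, 0.05, 0.06, months, closed_steps=januaries)
print(f"after {months} months: baseline={baseline[-1]:.0f}, "
      f"with January ban={with_ban[-1]:.0f}")
```

With these made-up rates the baseline stock slowly shrinks, and the January closure leaves more fish at the end of the run. The interesting work in a real model is in choosing which flows to include and how to estimate them.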

Feedback Loops

Systems thinking starts to give you the vocabulary and tools to think about feedback loops. What moves in response to your intervention? What moves against it? How does timing affect your attempts to change the system? As Meadows notes:

Because of feedback delays within complex systems, by the time a problem becomes apparent it may be unnecessarily difficult to solve. — A stitch in time saves nine.

Feedback, by definition, cannot affect the current stock. It can only affect future levels of stock through changes in flow. If you want your system to be as responsive to feedback as possible, you will want to minimize the delay between learning the information in the feedback and taking some action to affect the flow. The principle here is intrinsic responsibility. Examples of systems design with intrinsic responsibility include:

- A general riding out to war with his troops, or a captain going down with his ship

- A pilot at the front of a plane

- A thermostat in each room, set to an ideal temperature

- A democracy where leaders are held directly accountable for their decisions

- A market where companies gain or lose share based on the quality of their product (for this to be true though, the quality must be observable and known)

- A carbon tax to avoid the externality of CO2
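The point about delay between feedback and action can be illustrated with a toy simulation. This is a minimal sketch under my own assumptions: the function name `simulate_delayed_feedback`, the proportional correction rule, and the numbers are hypothetical and do not come from the book. A controller nudges a stock toward a target, but it only sees the stock as it was `delay` steps ago.

```python
def simulate_delayed_feedback(target, initial, gain, delay, steps):
    """Adjust a stock toward `target` using a reading that is `delay` steps old."""
    history = [float(initial)]
    for _ in range(steps):
        # The controller only sees the stock as it was `delay` steps ago
        # (or the initial value if not enough history exists yet).
        observed = history[max(len(history) - 1 - delay, 0)]
        # Feedback changes the flow, never the current stock directly.
        flow = gain * (target - observed)
        history.append(history[-1] + flow)
    return history


# Hypothetical thermostat: start at 10 degrees, aim for 20.
immediate = simulate_delayed_feedback(target=20, initial=10, gain=0.3, delay=0, steps=30)
delayed = simulate_delayed_feedback(target=20, initial=10, gain=0.3, delay=3, steps=30)
print(f"peak with no delay: {max(immediate):.1f}")
print(f"peak with a 3-step delay: {max(delayed):.1f}")
```

In this toy setup the controller with no delay approaches the target without passing it, while the delayed one keeps pushing on stale information and overshoots.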

Readers may observe that there are tradeoffs in designing systems well. All optimizations have this property. If your goal is to increase a stock of something and it’s not increasing, then you are likely missing something fundamental in your system design.

Keep in mind that all systems and all agents in any system suffer from bounded rationality. There might be an optimal behavior and it might not happen. You as the system modeler also suffer from this. The incentives at one layer may run counter to the broader organization’s goals, and the same can be true of information flows, stresses, and constraints.

Constraints and Non-Linearities

All systems are affected by constraints. If you want to increase something, you will need to identify the limiting factor. Sometimes this is unintuitive, so pay close attention whenever your system behaves in a way that doesn’t match your mental model. Often the cause is a non-linear constraint: one where the same change in input does not always produce the same change in output (no consistent dose-response relationship).

Meadows puts this well in the context of development economics:

Rich countries transfer capital or technology to poor ones and wonder why the economies of the receiving countries still don’t develop, never thinking that capital or technology may not be the most limiting factors.

A company may richly resource its key strategic project only to see it fail. This is an example of a non-linear constraint in action. The optimal number of people might be the size of a two-pizza team, and adding a resource (people) beyond that may actually decrease the output. Thinking in system terms pushes you to identify all of the key inflows and outflows. If you can rigorously measure and benchmark them, you will have a starting point for diagnosis.
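One way to see how adding people can reduce output is a toy model where every pair of teammates costs some coordination effort. This is a sketch under stated assumptions, not a claim about how real teams behave: the function names `team_output` and `best_team_size`, the pairwise-cost formula, and the numbers are all hypothetical.

```python
def team_output(people, output_per_person=10.0, cost_per_pair=1.5):
    """Toy model: individual output minus a coordination cost for every pair."""
    pairs = people * (people - 1) / 2
    return people * output_per_person - cost_per_pair * pairs


def best_team_size(max_people, **params):
    """Return the team size (1..max_people) with the highest modeled output."""
    return max(range(1, max_people + 1), key=lambda n: team_output(n, **params))


for size in (3, 7, 12):
    print(f"{size} people -> output {team_output(size):.1f}")
print("best size under these assumptions:", best_team_size(20))
```

Output rises with headcount at first, then falls once the coordination cost grows faster than the individual contributions. Where the peak sits depends entirely on the assumed parameters, which is why measuring your own system matters.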

One of my favorite system designs is the National Football League in the United States. For those who are less familiar, it is a competition of 32 professional teams and the most lucrative sports league in the world. The ultimate stock in this system is the level of interest. A key inflow is the level of competition. Rich-get-richer dynamics are a fact of life in sports: the best teams have the most revenue and can afford the best players, which leads to more revenue and the ability to afford even better players (see professional soccer). To break this loop, the NFL instituted a salary cap, which means the rich do not get richer (except in facilities and coaching staff). This system redesign has helped make it the most successful league in the world and the programming that holds the cable bundle together.

Traps and Mitigations

Meadows outlines several types of traps and ways to mitigate them in system design. We talked about the rich-get-richer above in the NFL example.

Keep in mind that you may face policy resistance to any solution. You will need to iterate creatively to work through it, potentially including giving up control in that area. Policy resistance is the pushback you see when the incentives, constraints, and bounded rationality of the actors in the system pull against your intervention.

I encourage you to read the book to learn more about all of the traps. For now I will highlight two that stood out to me:

- Tragedy of the commons – an insufficient feedback loop to reduce some negative behavior. Mitigations include education/exhortation, privatization, and regulation. All of them come with their downsides in effectiveness and costs.

- Seeking the wrong goal – goals are the measurement of sub-components of the system. If you have the wrong goal in place, it’s very hard to get the system to maximize the true objective. The mitigation is to pay very close attention to what the goals are and what is rewarded (the real goal). Be very wary of Goodhart’s law. Think deeply about what you reward. I’ve seen this trap in leaders who state they value one thing but in practice value another.

A Concrete Example – Hiring Pipeline

Now that we have the basics, let’s work through an example of a hiring pipeline:

Note: there are more steps and potential mitigations than those listed here

If your org is not scaling, then at some level you don’t have enough employees contributing value. In this simplified system diagram, we’re only looking up to the point where someone is ramped up well in a new role. Caveat: there are many reasons why an experienced employee may not contribute optimally, but that’s out of scope here. (Systems need boundaries to be tractable!) Analyzing your process will help you identify which part of the system needs the most investment, and each part calls for different solutions. Many companies immediately see paying more as the primary lever for increasing the pipeline. However, a system approach will identify many other potential factors that could be more effective.
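To show what this kind of analysis could look like, here is a minimal sketch that treats the pipeline as a chain of stages, each passing a fraction of candidates on to the next. The stage names, pass rates, and function names (`ramped_hires`, `gain_from_improving`) are all hypothetical; substitute your own measurements. The sketch asks one question: if I could raise one stage's pass rate by five percentage points, which stage would add the most ramped-up employees?

```python
def ramped_hires(applicants, stages):
    """Multiply the applicant count through each stage's pass rate."""
    result = float(applicants)
    for _name, rate in stages:
        result *= rate
    return result


def gain_from_improving(applicants, stages, bump=0.05):
    """Extra ramped hires from raising one stage's pass rate by `bump` at a time."""
    base = ramped_hires(applicants, stages)
    gains = {}
    for i, (name, rate) in enumerate(stages):
        improved = list(stages)
        improved[i] = (name, min(rate + bump, 1.0))  # a rate cannot exceed 100%
        gains[name] = ramped_hires(applicants, improved) - base
    return gains


# Hypothetical stages and pass rates, for illustration only.
stages = [
    ("passes screen", 0.20),
    ("passes interviews", 0.25),
    ("accepts offer", 0.60),
    ("ramps up", 0.80),
]
gains = gain_from_improving(1000, stages)
for name, gain in sorted(gains.items(), key=lambda item: item[1], reverse=True):
    print(f"+5 points on '{name}': {gain:+.1f} ramped hires")
```

With these invented numbers, improving offer acceptance (the stage that paying more most directly affects) helps less than improving the earlier stages. Your own data may well point somewhere else, which is the reason to model the whole pipeline before picking a lever.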

A System Thinker

Now you’re on your way to being a system thinker! Here are some parting thoughts I would leave you with:

- Understand how the world works, generally. Charlie Munger has very similar advice in learning all of the big ideas from the disciplines. Your attempt at a system diagram will be limited if you do not understand enough about the world to build a reasonable model.

- Appreciate ambiguity. Nothing is perfectly clear and there’s a lot of judgment in thinking through systems. Learn well from every iteration.

- Do not forget that decreasing outflows is an option!

- Sometimes less is more: by controlling less, you can get the system to optimize the few things that really matter.

Life is unbelievably complex. Systems thinking is one of the best tools to help you make sense of it.

Here are some quotes I liked from the book:

You can see why nonlinearities produce surprises. They foil the reasonable expectation that if a little of some cure did a little good, then a lot of it will do a lot of good—or alternately that if a little destructive action caused only a tolerable amount of harm, then more of that same kind of destruction will cause only a bit more harm.

The alternative to overpowering policy resistance is so counterintuitive that it’s usually unthinkable. Let go. Give up ineffective policies. Let the resources and energy spent on both enforcing and resisting be used for more constructive purposes.